The Future of AI in 2026: Insights from the Most Important Research of 2025

An integrated briefing across AI Agents, Data Engineering, AI Security, and Software Engineering

Why This Matters Now

2025 was the year AI crossed from capability demos into operational systems.

Across agents, data infrastructure, security, and software engineering, the strongest research papers shared a common shift in posture: they stopped asking *“Can models do this?”* and started asking *“What does it take to run this reliably, safely, and at scale?”*

That distinction matters for founders and executives. It marks the transition from experimentation to system-building—and it clarifies what will differentiate real products from prototypes in 2026.

This post synthesizes the most important research signals across four domains, grounding them in what the papers actually show and what they unlock next.

---

AI Agents

The most important AI agent research of 2025 converged on a simple realization: agents are not prompts with tools; they are long-running systems.

Three ideas stand out.

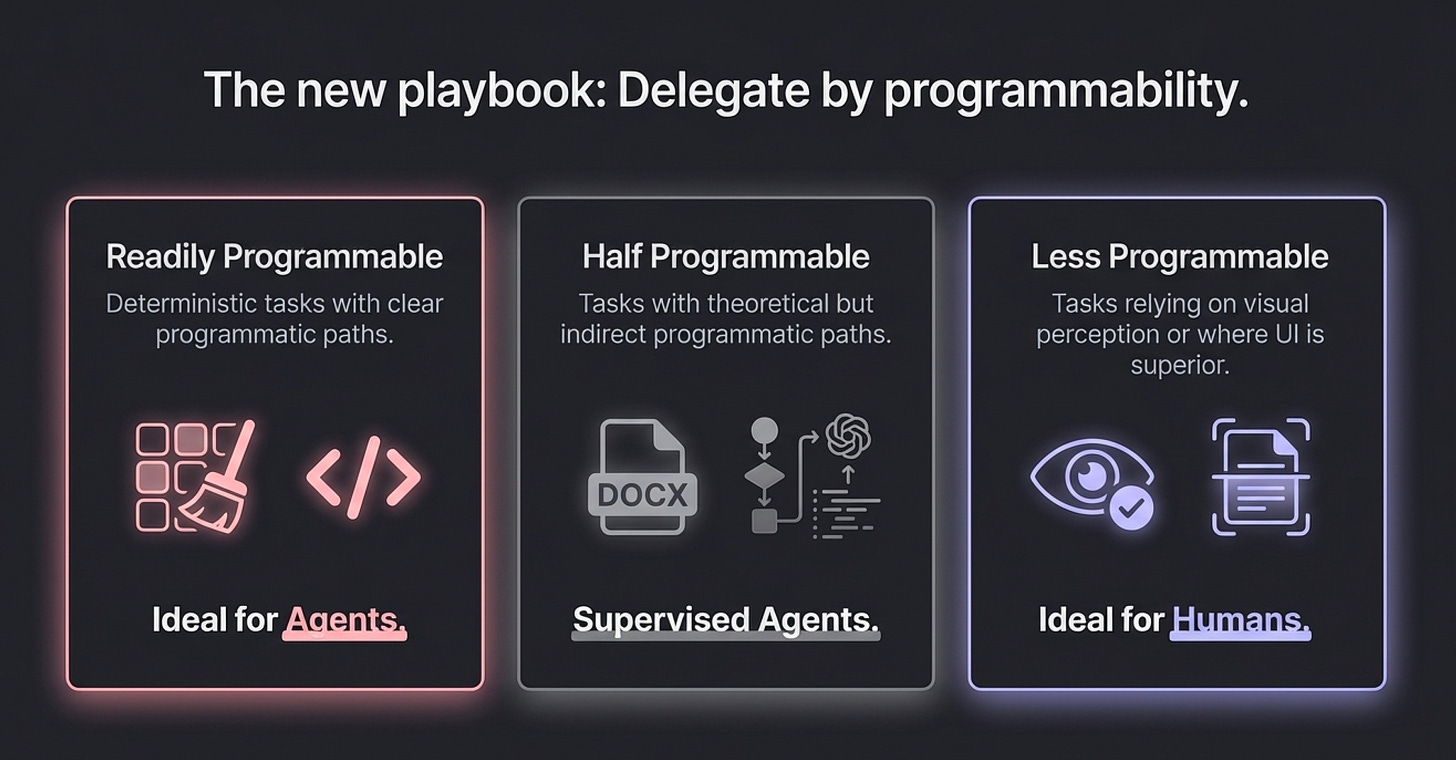

First, agents do work in a fundamentally different way than humans do.

Research comparing human and agent workflows shows that agents operate almost entirely programmatically, through APIs, scripts, and structured commands, and largely skip graphical interfaces and visual checks. This makes them fast and cheap, but brittle. When ambiguity or novelty appears, agents rarely pause to question assumptions. Instead, they proceed deterministically, sometimes fabricating missing steps.

Second, reliability must be enforced at the system level.

Papers like SagaLLM borrow transaction concepts from distributed systems—validation, rollback, compensating actions—to keep multi-agent workflows consistent when partial failures occur. This separates “intelligence” from “correctness,” a pattern that is essential for enterprise-grade use cases.
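To make the transaction idea concrete, here is a minimal sketch of the saga pattern that SagaLLM draws on. Each step pairs an action with a compensating action, and if a later step fails, the steps that already succeeded are undone in reverse order. The `Step` and `run_saga` names and the booking workflow are hypothetical illustrations, not SagaLLM's API, and the sketch shows only the rollback half of the idea (no validation layer).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One unit of agent work plus the action that undoes it (hypothetical structure)."""
    name: str
    action: Callable[[dict], None]
    compensate: Callable[[dict], None]


def run_saga(steps: list[Step], state: dict) -> bool:
    """Run steps in order; if one fails, compensate completed steps newest-first."""
    log = state.setdefault("log", [])
    completed: list[Step] = []
    for step in steps:
        try:
            step.action(state)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            # Roll back only what actually succeeded, in reverse order.
            for done in reversed(completed):
                done.compensate(state)
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
    return True


def charge_card(state: dict) -> None:
    # Simulated partial failure late in the workflow.
    raise RuntimeError("payment declined")


state: dict = {}
ok = run_saga(
    [
        Step("flight", lambda s: s.update(flight="booked"), lambda s: s.update(flight="cancelled")),
        Step("hotel", lambda s: s.update(hotel="booked"), lambda s: s.update(hotel="cancelled")),
        Step("payment", charge_card, lambda s: None),
    ],
    state,
)
print(ok, state["flight"], state["hotel"])  # False cancelled cancelled
print(state["log"])  # ['payment failed: payment declined', 'compensated hotel', 'compensated flight']
```

The point of the pattern is that correctness no longer depends on the agent "remembering" to clean up: the workflow engine guarantees it.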

Third, agent architectures themselves may be learned.

Instead of hand-designing agent roles and workflows, some research treats agent design as a search problem—automatically discovering structures that outperform human-designed baselines as tasks evolve.
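Framed as search, agent design becomes a loop: propose candidate configurations, score each one on a task, keep the best. The sketch below uses plain random search over a hypothetical design space (number of roles, whether a reviewer agent is included, retry budget), with a toy `evaluate` function standing in for running a benchmark. Automated Design of Agentic Systems instead uses an LLM-driven meta-agent that writes new agent designs as code; this sketch only illustrates the search framing, not that method.

```python
import random

# Hypothetical design space; real systems search over prompts, roles, and code.
DESIGN_SPACE = {
    "num_roles": [1, 2, 3, 4],
    "use_reviewer": [False, True],
    "max_retries": [0, 1, 2],
}


def sample_design(rng: random.Random) -> dict:
    """Draw one candidate agent configuration from the design space."""
    return {key: rng.choice(options) for key, options in DESIGN_SPACE.items()}


def evaluate(design: dict) -> float:
    """Toy stand-in for benchmark success rate.

    Assumption for illustration: a reviewer and one retry help,
    while roles beyond two add coordination cost.
    """
    score = 0.5
    score += 0.2 if design["use_reviewer"] else 0.0
    score += 0.1 * min(design["max_retries"], 1)
    score -= 0.05 * abs(design["num_roles"] - 2)
    return score


def search(budget: int, seed: int = 0) -> tuple[dict, float]:
    """Random search: return the best-scoring design seen within the budget."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(budget):
        candidate = sample_design(rng)
        candidate_score = evaluate(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best, best_score


best, score = search(budget=50)
print(best, round(score, 2))
```

Swapping `evaluate` for a real task suite, and random sampling for a smarter proposer, is what turns this toy into the kind of system the research describes.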

What this unlocks in 2026

Expect agent platforms to look less like orchestration scripts and more like workflow engines: explicit state, validation, recovery, and adaptive structure. The winning systems will not chase autonomy for its own sake—they will design for predictable collaboration between agents and humans.

---

Data Engineering

The strongest data engineering research of 2025 did not add “AI features” to existing stacks. Instead, it questioned whether the stack itself still makes sense.

Three shifts stand out.

First, streaming and storage are collapsing together.

Systems such as Ursa show that writing streams directly into lakehouse tables can eliminate connector sprawl and reduce cloud costs. The key recognition is that most modern ingestion workloads need sub-second freshness, not the ultra-low-latency architectures designed for a different era.
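The freshness trade-off can be sketched as a micro-batching writer: instead of moving each record through a separate connector, the writer buffers records and commits them to a table in batches, flushing when either a size threshold or an age threshold is reached. The `MicroBatchWriter` class, its thresholds, and the in-memory list standing in for a lakehouse table are all hypothetical; this is not Ursa's implementation, only an illustration of how a bounded-freshness target replaces per-record latency.

```python
import time
from typing import Callable, Optional


class MicroBatchWriter:
    """Buffers stream records and commits them to a table in batches (illustrative)."""

    def __init__(self, commit: Callable[[list], None], max_records: int = 1000,
                 max_age_s: float = 0.5, clock: Callable[[], float] = time.monotonic):
        self.commit = commit          # stand-in for an atomic lakehouse table commit
        self.max_records = max_records
        self.max_age_s = max_age_s    # freshness bound for the oldest buffered record
        self.clock = clock
        self.buffer: list = []
        self.oldest: Optional[float] = None

    def append(self, record) -> None:
        if not self.buffer:
            self.oldest = self.clock()
        self.buffer.append(record)
        self.maybe_flush()

    def maybe_flush(self) -> None:
        """Commit if the batch is full or the oldest record has waited too long.

        A real writer would also call this from a periodic timer.
        """
        if not self.buffer:
            return
        full = len(self.buffer) >= self.max_records
        stale = self.clock() - self.oldest >= self.max_age_s
        if full or stale:
            self.commit(self.buffer)
            self.buffer, self.oldest = [], None


# Usage with a fake clock so the behavior is deterministic.
table: list[list] = []
now = [0.0]
writer = MicroBatchWriter(table.append, max_records=3, max_age_s=0.5, clock=lambda: now[0])
for i in range(4):
    writer.append(i)      # the 3rd record triggers a size-based commit
now[0] = 0.6
writer.maybe_flush()      # record 3 has waited 0.6 s >= 0.5 s, so it is committed
print(table)  # [[0, 1, 2], [3]]
```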

Second, retrieval and generation must scale independently.

The Chameleon paper demonstrates that RAG serving is a systems composition problem, not just a GPU problem. Retrieval and generation have different compute profiles, and forcing them onto the same hardware leads to inefficiency. Disaggregated, heterogeneous architectures show measurable latency and throughput gains.
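A back-of-the-envelope model shows why independent scaling matters. If every node must carry both a retrieval unit and a generation unit, the slower stage dictates the node count and the faster stage is over-provisioned; with separate pools, each stage is sized to its own load. The capacities and unit costs below are invented for illustration and do not come from the Chameleon paper.

```python
import math


def coupled_cost(target_rps: float, r_cap: float, g_cap: float,
                 r_cost: float, g_cost: float) -> float:
    """Every node carries one retrieval unit and one generation unit.

    The slower stage sets the node count, so the faster stage sits partly idle.
    """
    nodes = math.ceil(target_rps / min(r_cap, g_cap))
    return nodes * (r_cost + g_cost)


def disaggregated_cost(target_rps: float, r_cap: float, g_cap: float,
                       r_cost: float, g_cost: float) -> float:
    """Retrieval and generation pools are sized independently."""
    r_nodes = math.ceil(target_rps / r_cap)
    g_nodes = math.ceil(target_rps / g_cap)
    return r_nodes * r_cost + g_nodes * g_cost


# Hypothetical: a retrieval unit serves 500 req/s, a generation unit 50 req/s.
args = dict(target_rps=1000, r_cap=500, g_cap=50, r_cost=3, g_cost=10)
print(coupled_cost(**args))        # 260
print(disaggregated_cost(**args))  # 206
```

The gap widens as the two stages' capacities diverge, and when the workload shifts (for example, heavier retrieval fan-out), only one pool needs to change.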

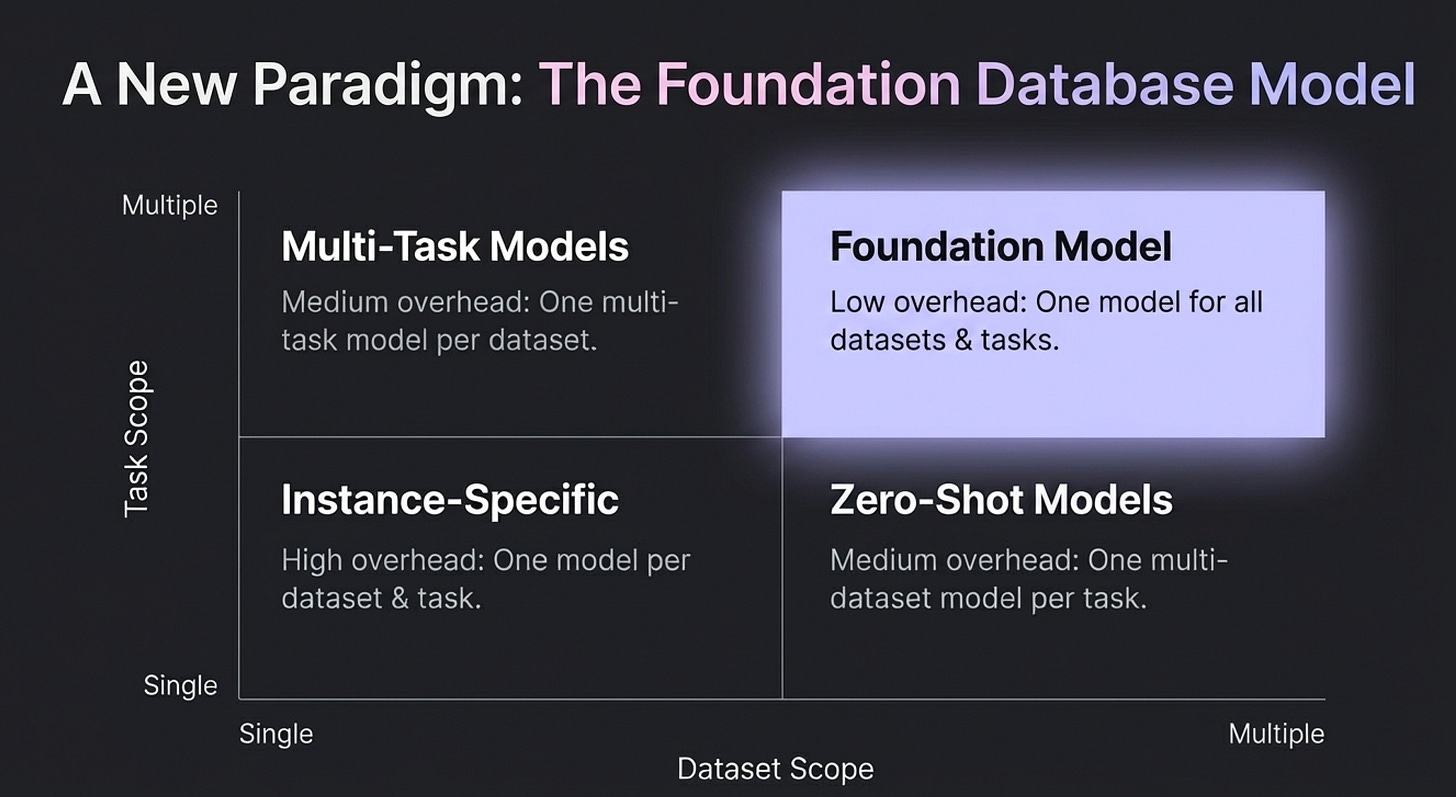

Third, ML inside databases must become reusable and generalizable.

Foundation Database Models (FDBMs) propose pre-trained, transferable representations that dramatically reduce per-dataset retraining overhead. This turns “learned DB components” from bespoke one-off projects into platform capabilities.

What this unlocks in 2026

Data platforms will increasingly be judged on how well they support AI-native workloads: fewer moving parts, clearer scaling boundaries, and predictable cost profiles for retrieval-heavy systems. Infrastructure that aligns with how AI actually runs—not how analytics ran five years ago—will pull ahead.

---

AI Security

AI security research in 2025 made one point unambiguous: model-centric safeguards are no longer sufficient.

Three lessons recur across the best papers.

First, autonomy changes the threat model.

Anthropic’s documentation of an AI-orchestrated cyber-espionage campaign shows agents acting as execution engines, not just advisors. While full autonomy remains brittle, the direction is clear: AI can already coordinate multi-step operations with limited human oversight.

Second, security failures emerge from composition.

Papers on agent security show that risks arise across tool chains, shared memory, and multi-agent coordination—not just from prompt injection. The system, not the model, becomes the attack surface.

Third, protocols like MCP require governance, not trust.

MCP makes tool integration powerful but introduces new injection surfaces through tool descriptions, files, and metadata. Treating tools as trusted extensions is unsafe by default; they must be scoped, monitored, and audited like credentials.

TRiSM frameworks for Agentic AI respond to this by treating trust, risk, and security as continuous system properties—spanning lifecycle governance, observability, and control.
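Treating MCP tools like credentials can be sketched as a gate in front of every tool call: each agent gets an explicit allowlist of tools and argument constraints, every call is written to an audit log, and anything outside scope is denied by default. The `ToolPolicy` and `ToolGateway` names and the path-prefix rule are hypothetical; none of this is part of the MCP specification or an MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolPolicy:
    """Per-agent scope: which tools may be called, and with which arguments."""
    allowed_tools: set[str]
    allowed_path_prefix: str = "/workspace/"   # hypothetical argument constraint


@dataclass
class ToolGateway:
    tools: dict[str, Callable[..., Any]]
    policies: dict[str, ToolPolicy]
    audit_log: list[tuple[str, str, dict, str]] = field(default_factory=list)

    def call(self, agent: str, tool: str, **kwargs: Any) -> Any:
        policy = self.policies.get(agent)
        # Deny by default: unknown agents and out-of-scope tools are rejected.
        if policy is None or tool not in policy.allowed_tools:
            self.audit_log.append((agent, tool, kwargs, "denied: tool not in scope"))
            raise PermissionError(f"{agent} may not call {tool}")
        path = kwargs.get("path")
        if path is not None and not str(path).startswith(policy.allowed_path_prefix):
            self.audit_log.append((agent, tool, kwargs, "denied: path out of scope"))
            raise PermissionError(f"{agent} may not access {path}")
        self.audit_log.append((agent, tool, kwargs, "allowed"))
        return self.tools[tool](**kwargs)


# Usage: a research agent may read files in its workspace but may not send email.
gateway = ToolGateway(
    tools={"read_file": lambda path: f"<contents of {path}>",
           "send_email": lambda to, body: "sent"},
    policies={"researcher": ToolPolicy(allowed_tools={"read_file"})},
)
print(gateway.call("researcher", "read_file", path="/workspace/notes.txt"))
for tool, kwargs in [("send_email", {"to": "x@example.com", "body": "hi"}),
                     ("read_file", {"path": "/etc/passwd"})]:
    try:
        gateway.call("researcher", tool, **kwargs)
    except PermissionError as err:
        print("blocked:", err)
```

Because the gate enforces scope outside the model, a poisoned tool description or file cannot talk the agent into a call the policy never granted; at worst, the attempt shows up in the audit log.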

What this unlocks in 2026

Security will increasingly be a design-time concern for agentic systems, not an afterthought. Enterprises that invest early in tool governance, runtime monitoring, and system-level threat modeling will move faster with fewer surprises—not slower.

---

Software Engineering

AI’s impact on software engineering in 2025 extended well beyond code generation.

The most credible research shows three shifts.

First, testing must target risk, not coverage.

Meta’s mutation-guided testing demonstrates that many high-value tests do not increase line coverage at all. They prevent real incidents by simulating meaningful failure modes.

Second, iteration beats one-shot automation.

Search-based code optimization and feedback-driven systems consistently outperform single-pass prompting. Measurement and selection matter more than clever prompts.
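The generate-measure-select loop behind these results can be sketched in a few lines: produce several candidate implementations, discard any that fail a correctness check against a reference, time the survivors, and keep the fastest. Here the candidates are hard-coded functions standing in for LLM-proposed rewrites; a real system would also feed the measurements back into the next round of generation, which this sketch omits.

```python
import timeit


# Hypothetical candidates for "sum of i*i for i in range(n)".
def loop_version(n: int) -> int:
    total = 0
    for i in range(n):
        total += i * i
    return total


def generator_version(n: int) -> int:
    return sum(i * i for i in range(n))


def closed_form_version(n: int) -> int:
    # Sum of i^2 for 0 <= i < n equals (n - 1) * n * (2n - 1) / 6.
    return (n - 1) * n * (2 * n - 1) // 6


def buggy_version(n: int) -> int:
    return sum(i * i for i in range(n + 1))   # off-by-one: includes n itself


def select_fastest(candidates, reference, inputs, repeats=20):
    """Keep only candidates that match the reference on all inputs, then pick the fastest."""
    correct = [c for c in candidates if all(c(x) == reference(x) for x in inputs)]
    timings = {c.__name__: timeit.timeit(lambda c=c: [c(x) for x in inputs], number=repeats)
               for c in correct}
    return min(timings, key=timings.get), timings


best, timings = select_fastest(
    [loop_version, generator_version, closed_form_version, buggy_version],
    reference=loop_version,
    inputs=[0, 1, 10, 1000],
)
print(best, sorted(timings))
```

The correctness filter comes before the timing step on purpose: a fast but wrong candidate (here, `buggy_version`) must never win on speed alone.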

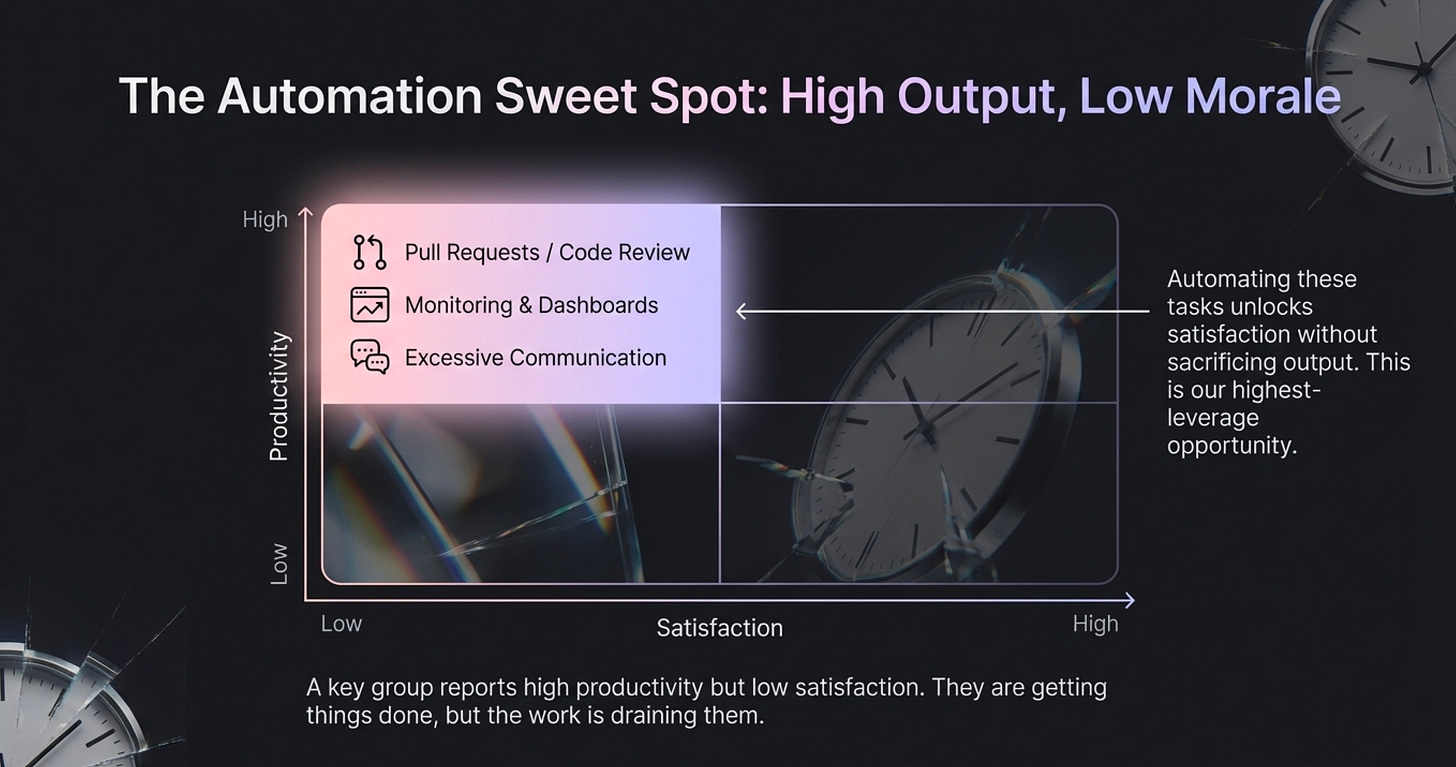

Third, developer time is still a scarce resource.

Research on developer work patterns shows that productivity drops when developers spend time on the tasks they value least, such as meetings, environment setup, compliance work, and configuring monitoring dashboards. The most effective AI systems reduce cognitive load, not just keystrokes.

Large-scale deployments like WhatsApp’s internal GenAI platform (WhatsCode) reinforce this: success came from workflow integration, graduated automation, and human judgment—not full autonomy.

What this unlocks in 2026

AI tooling will be evaluated less on “how well does it write code” and more on whether it improves reliability, focus, and decision quality. Teams that optimize for resilience and human attention will see durable gains.

---

Cross-Cutting Themes

Across all four categories, a few patterns emerge naturally:

Systems matter more than models

Iteration and feedback outperform one-shot intelligence

Autonomy shifts work; it doesn’t eliminate it

Governance and reliability are prerequisites for scale

Importantly, none of the research argues for slowing down. It argues for building differently.

---

Final Takeaway for 2026

The best research of 2025 points to a clear direction:

AI is becoming infrastructure.

In 2026, competitive advantage will come from teams that treat agents as systems, data as AI-native infrastructure, security as continuous governance, and productivity as a function of reduced risk and cognitive load.

The winners won’t be those with the flashiest demos—but those who build AI systems that hold up under real-world complexity.

---

Referenced Papers

Anthropic, Disrupting the First Reported AI-Orchestrated Cyber Espionage Campaign, 2025

A. Zou et al. Security Challenges in AI Agent Deployment: Insights from a Large Scale Public Competition, arXiv 2025

Y. Guo et al. Systematic Analysis of MCP Security, Proceedings of the ACM CCS 2025

S. Raza et al. TRiSM for Agentic AI: A Review of Trust, Risk, and Security Management in LLM-based Agentic Multi-Agent Systems, arXiv 2025

S. Hu et al. Automated Design of Agentic Systems, ICLR 2025

Z. Wang et al. How Do AI Agents Do Human Work? Comparing AI and Human Workflows Across Diverse Occupations, arXiv 2025

E. Chang et al. SagaLLM: Context Management, Validation, and Transaction Guarantees for Multi-Agent LLM Planning, PVLDB 2025

K. Wang et al. 1000 Layer Networks for Self-Supervised RL: Scaling Depth Can Enable New Goal-Reaching Capabilities, arXiv 2025

Google DeepMind, SIMA 2: A Generalist Embodied Agent for Virtual Worlds, 2025

H. Yang et al. Unlocking the Power of CI/CD for Data Pipelines in Distributed Data Warehouses, PVLDB 2025

C. Foster et al. Mutation-Guided LLM-based Test Generation at Meta, ACM 2025

S. Gao et al. Search-Based LLMs for Code Optimization, IEEE/ACM 2025

S. Kumar et al. Time Warp: The Gap Between Developers’ Ideal vs Actual Workweeks in an AI-Driven Era, IEEE/ACM 2025

K. Mao et al. WhatsCode: Large-Scale GenAI Deployment for Developer Efficiency at WhatsApp, ICSE-SEIP 2026

M. Merli et al. Ursa: A Lakehouse-Native Data Streaming Engine for Kafka, PVLDB 2025

W. Jiang et al. Chameleon: a Heterogeneous and Disaggregated Accelerator System for Retrieval-Augmented Language Models, PVLDB 2024

J. Wehrstein et al. Towards Foundation Database Models, CIDR 2025
